Network Congestion: An Analysis of the Ramses Central Fire and Its Impact on the Internet

On the evening of July 7, 2025, Egypt experienced a widespread internet outage that significantly affected users across the country. The disruption was triggered by a fire that broke out in the Ramses Central Exchange building, located in downtown Cairo. This facility serves as a critical hub of Egypt’s telecommunications infrastructure, housing essential equipment and networks that manage the flow of data and communications nationwide.

This paper provides a technical overview and analysis of the internet outage that occurred in Egypt on July 7, 2025, with a focus on its timing, scope, and impact on the network infrastructure. The analysis relies on data from two primary platforms dedicated to monitoring and investigating internet disruptions:

- IODA (Internet Outage Detection and Analysis): A research project run by the Georgia Institute of Technology that aims to monitor and analyze large-scale internet outages in real time. IODA leverages data from multiple sources, including BGP (Border Gateway Protocol), as well as active and passive measurement techniques, to detect and document outages affecting different networks and geographic regions.

- Cloudflare Radar: A platform that provides insights and analytics on global internet traffic, connection quality, and outages. It utilizes data collected by Cloudflare from its extensive network of servers and users, allowing for accurate and reliable tracking of network changes and disruptions.

This paper draws on data recorded between Monday, 7 July 2025, and Tuesday, 8 July 2025, up to 2:00 p.m. Cairo time. This period was selected as it captures the moment the fire erupted and the progression of the outage over more than 20 hours, allowing for a detailed chronological analysis of the crisis.

The Central Role of the Ramses Exchange and Network Congestion

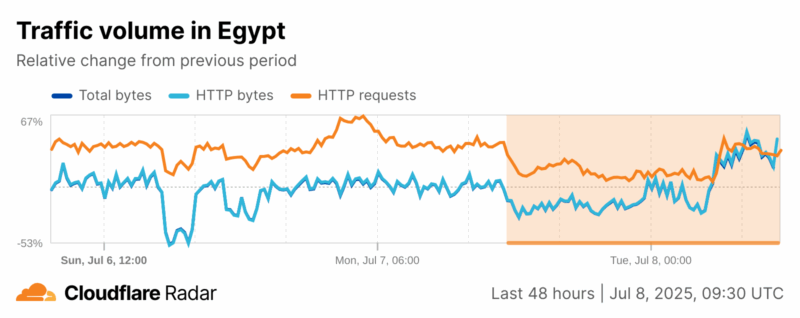

According to Cloudflare Radar, the impact of the fire on Egypt’s internet traffic was almost immediate. The platform detected a notable drop in the volume of data flowing through the Egyptian network. This decline was not confined to a single company or service provider; it affected several providers simultaneously, reflecting the critical role of the Ramses Central Exchange in distributing internet traffic across the country.

At 5:30 p.m. Cairo time, network indicators showed a 12% decrease in total data throughput and an 11% decrease in web browsing traffic (HTTP). Despite this drop in transmitted data, browsing requests remained elevated by 31%. This disparity suggests that users and applications continued to attempt to access the internet but were unsuccessful, as though there was a “choke point” preventing data from passing through, despite ongoing connection attempts.
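The "choke point" reading of these figures can be expressed as a simple check: data volume falls while request counts stay above baseline. The sketch below is illustrative only; the function names and the 5% minimum-drop threshold are assumptions introduced here, not part of Cloudflare Radar's methodology.

```python
def pct_change(observed: float, baseline: float) -> float:
    """Percentage change of an observed value relative to a non-zero baseline."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (observed - baseline) * 100.0 / baseline


def shows_choke_point(bytes_change_pct: float,
                      requests_change_pct: float,
                      min_drop_pct: float = 5.0) -> bool:
    """True when data volume falls while request counts stay above baseline.

    min_drop_pct is an illustrative threshold, not a value from the source.
    """
    return bytes_change_pct <= -min_drop_pct and requests_change_pct > 0


# A volume of 88 units against a baseline of 100 is a 12% decrease.
print(pct_change(88.0, 100.0))  # -12.0

# National figures reported for 5:30 p.m.: data volume -12%, requests +31%.
national_flag = shows_choke_point(-12.0, 31.0)
print(national_flag)  # True
```

A pair in which both indicators fall together would not be flagged, which distinguishes this pattern from a plain loss of demand.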

The outage reached its first peak around 6:15 p.m., about 45 minutes after the fire broke out, with data volumes dropping by 27%, the steepest decline recorded during the incident. The network experienced a brief partial recovery, but the decline resurfaced at 11:30 p.m. the same day, registering a 25% drop.
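The elapsed times in this timeline can be checked with a few lines of arithmetic. The sketch below takes 5:30 p.m. as the onset of the fire, which is what the 45-minute figure implies, and treats all timestamps as Cairo local time with no clock change inside the window; the variable and function names are illustrative.

```python
from datetime import datetime

# Assumed onset: 5:30 p.m. Cairo time on Monday, 7 July 2025.
FIRE_ONSET = datetime(2025, 7, 7, 17, 30)


def hours_since_onset(moment: datetime) -> float:
    """Hours elapsed between the assumed onset and a later local timestamp."""
    return (moment - FIRE_ONSET).total_seconds() / 3600


checkpoints = {
    "first peak (6:15 p.m. Monday)": datetime(2025, 7, 7, 18, 15),
    "second decline (11:30 p.m. Monday)": datetime(2025, 7, 7, 23, 30),
    "end of observation window (2:00 p.m. Tuesday)": datetime(2025, 7, 8, 14, 0),
}

elapsed = {label: hours_since_onset(t) for label, t in checkpoints.items()}
for label, hours in elapsed.items():
    print(f"{label}: {hours:.2f} h")
# first peak (6:15 p.m. Monday): 0.75 h
# second decline (11:30 p.m. Monday): 6.00 h
# end of observation window (2:00 p.m. Tuesday): 20.50 h
```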

The persistence of the disruption for several hours indicates that the damage was neither superficial nor short-lived; rather, it likely affected critical components of the infrastructure, making service restoration a complex process. The fact that this decline occurred simultaneously across multiple service providers supports the hypothesis that the Ramses Central Exchange served as a central hub upon which various networks depended, its failure leading to widespread disruption.

By the early hours of Tuesday, indicators showed gradual improvement. At 2:15 a.m., there was an 8.5% increase in total data throughput and a 9.2% rise in web traffic. However, this recovery was unstable, as indicators continued to fluctuate in the following hours.

At 5:15 a.m., a new decline of 23% was recorded, followed by a significant rebound at 7:45 a.m., when total data rose by 27%, web traffic by 29%, and browsing requests by 41%. This rebound suggests that some parts of the network had been restored, though it did not signify a full return to normalcy.

By 10:00 a.m., data volumes were still below their usual levels, with only modest gains: a 19% increase in total data, a 20% increase in web traffic, and a 31% increase in browsing requests. Despite these improvements, Cloudflare continued to classify the situation as an “active outage,” indicating that the crisis was not yet resolved more than 16 hours after the fire began.

Graphs provided by Cloudflare clearly demonstrate that the outage was prolonged rather than momentary, and the restoration of service was uneven, marked by repeated disruptions and fluctuations. This pattern strengthens the likelihood that the fire caused damage to critical physical components inside the exchange or created issues in the interconnections between different service providers.

It is also notable that browsing requests—users’ attempts to reach websites and services—remained consistently high throughout the crisis, even when data transmission volumes were extremely low. This reflects the presence of a “bottleneck” within the local network: requests were reaching the network but were not being processed or responded to properly, indicating internal network congestion rather than a disruption caused by undersea cables or international data centers.

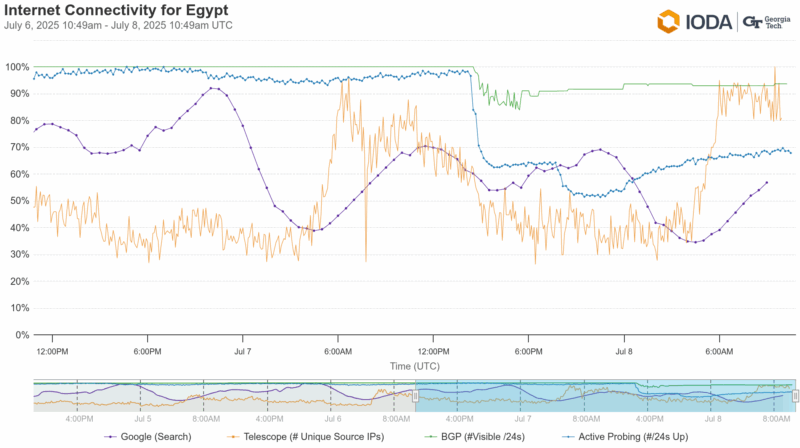

The data recorded by IODA reinforces the findings revealed by Cloudflare Radar regarding the timing and severity of the outage. At approximately 5:30 p.m., IODA’s indicators showed that Egypt’s internet was experiencing a significant disruption, with numerous networks becoming inaccessible from outside the country.

At the same time, users found it increasingly difficult to access services such as search engines and email, reflecting a genuine difficulty in maintaining connections with the outside world. Some infrastructure-related indicators nevertheless remained relatively stable, which suggests that the cause was likely a sudden physical failure, consistent with the outbreak of the fire at the Ramses Central Exchange.

IODA’s data confirms that the outage was far more than a minor technical glitch; it was a serious technical crisis that lasted for several hours and disrupted large segments of the network.

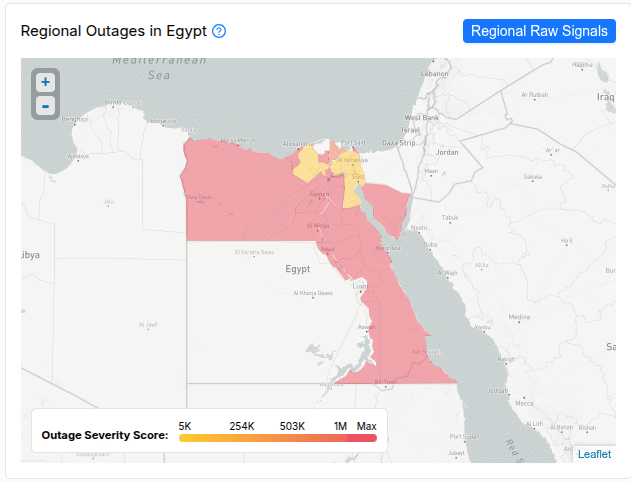

This analysis also highlights the geographic dimension of the outage, as shown in a map released by IODA that illustrates the spread of the crisis across Egypt’s governorates. The map reveals that the disruption was most severe in major governorates such as Cairo, Giza, and Alexandria, while also affecting vast areas across the Delta, Upper Egypt, and Sinai. The map uses a color scale ranging from yellow to deep red, indicating that the damage was not confined to a single region but extended nationwide.

These findings underscore Egypt’s heavy reliance on a traditional, centralized telecommunications infrastructure and demonstrate how a single incident—such as a local fire—can have far-reaching impacts on internet services in the absence of effective alternatives or backup routes. They also support the conclusion that the Ramses Central Exchange functioned as a pivotal hub for data distribution, where the failure of just one central node can produce cascading effects across areas with no direct geographic connection to the fire’s location.
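The effect of losing a single central node can be illustrated with a toy reachability model. The topology below is entirely hypothetical: the node names (`isp_a`, `hub`, `backup`, `upstream`) and the links between them are assumptions made for illustration and do not describe the actual interconnection of Egyptian providers.

```python
from collections import deque


def reachable(graph, start, removed=frozenset()):
    """Breadth-first search returning every node reachable from start,
    skipping any node listed in removed."""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbor in graph.get(node, ()):
            if neighbor not in removed and neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return seen


# Hypothetical links: three providers depend on one hub; only isp_c
# also has a path through a backup exchange.
links = [
    ("isp_a", "hub"), ("isp_b", "hub"), ("isp_c", "hub"),
    ("isp_c", "backup"),
    ("hub", "upstream"), ("backup", "upstream"),
]

graph = {}
for a, b in links:
    graph.setdefault(a, set()).add(b)
    graph.setdefault(b, set()).add(a)

providers = ["isp_a", "isp_b", "isp_c"]
still_connected = reachable(graph, "upstream", removed={"hub"})
cut_off = [p for p in providers if p not in still_connected]
print(cut_off)  # ['isp_a', 'isp_b']
```

In this toy model, only the provider with a second path keeps its connection once the hub is removed, which is the general property that backup routes are meant to provide.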

Furthermore, the slow pace of service restoration highlights weaknesses in emergency response protocols and the distribution of digital infrastructure, underscoring the need for a comprehensive review of network management and distribution strategies to ensure greater resilience and stability in times of crisis.

Networks Under Pressure: An Ordinary Day Turns into a Crisis

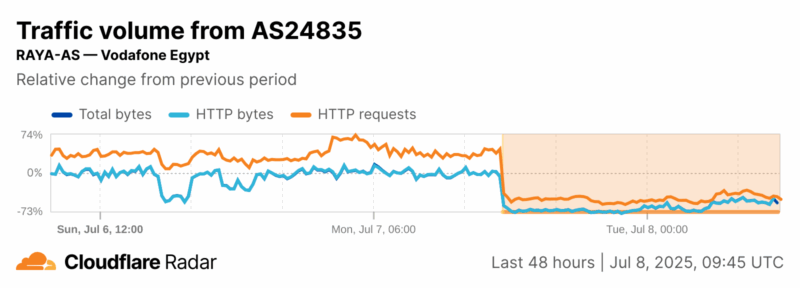

Vodafone Network

Until late Monday afternoon, Vodafone’s internet service was operating normally. The three key indicators monitoring the network’s performance (data volume transmitted and received, browsing traffic, and the number of user requests to access websites or services) all remained within their usual daily ranges. In fact, at 4:45 p.m., the number of browsing attempts rose sharply to 47% above the typical daily level, yet this surge in demand did not strain the volume of data being transmitted, indicating that the network was handling the increased load without issue.

About half an hour later, at 5:15 p.m., the first signs of trouble appeared on Vodafone’s network. The volume of data passing through the network declined by roughly 4%, an early sign of deteriorating performance.

At the same time, the number of user attempts to reach online services continued to rise, reaching 48% above the normal level. This mismatch—persisting demand alongside a falling data flow—suggested that devices were still attempting to load websites and data, but the network could no longer respond effectively, indicating an internal malfunction that was starting to escalate.

By 5:30 p.m., at the exact moment the fire broke out at the Ramses Central Exchange, Vodafone’s internet traffic collapsed abruptly. According to Cloudflare Radar, the volume of data transmitted across the network plunged by 58%, both in total traffic and in web browsing data.

At the same time, the volume of user requests to access online services also fell by 35%. Cloudflare classified this sudden change as a sign of a major outage, quickly labeling the situation an “active outage,” indicating that Vodafone was experiencing a severe, prolonged service disruption—one that persisted for several hours.

The situation worsened as the evening progressed. By 9:00 p.m., approximately three and a half hours after the collapse began, data volumes had decreased by 66%, while the number of user requests to access or exchange information had dropped by 46%. By midnight, the decline had reached its lowest point, with a 71% drop in data volume and a 56% drop in user requests. This meant that Vodafone’s internet service was virtually paralyzed, with most users unable to access the internet in any meaningful way.

In the early hours of Tuesday, 8 July, signs of partial recovery began to emerge. At 4:15 a.m., data volumes had improved slightly, though they remained far below normal levels—the network was still 53% below its usual data volume, and user browsing requests were down by 46%. This partial recovery progressed slowly, with metrics remaining at roughly the same diminished levels until 11:00 a.m., leaving users facing significant difficulties accessing the internet. The problem was clearly far from resolved.

Throughout the entire period, Cloudflare continued to classify the situation as an “active outage.” The orange-shaded areas on the platform’s charts represent the duration of the disruption, while the curves clearly indicate that the collapse was sudden and nearly total, followed by a slow and unstable recovery phase.

The synchronized decline in both data volume and user requests demonstrates that the issue was not merely a temporary slowdown or momentary congestion but rather a complete breakdown of core communication routes. This scenario strongly suggests that a critical segment of Vodafone’s infrastructure relied on equipment housed within the Ramses Central Exchange. When that infrastructure was damaged, it became impossible to activate alternate routes or failover mechanisms, resulting in prolonged network paralysis.

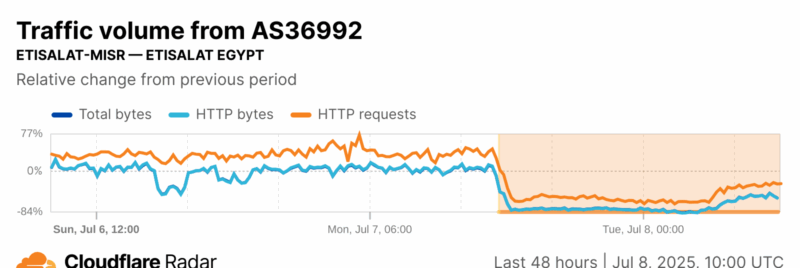

Etisalat Network

Data from Cloudflare shows that Etisalat Misr’s network was operating normally and stably through most of Monday, with no evident technical issues. The volume of data passing through the network was within expected levels, and there was even a noticeable increase in user activity. At 5:00 p.m., the number of user attempts to browse the internet rose to 48% above the normal daily average, while the volume of data being downloaded or browsed increased by approximately 12–13%. These figures indicate that the network was experiencing high traffic without any apparent technical problems.

This situation changed dramatically within just a few minutes. At 5:30 p.m., the same time the fire broke out, Etisalat’s network began to lose its ability to function effectively. According to Cloudflare’s data, the volume of data transmitted across the network plummeted suddenly by 41%, while web browsing traffic fell by 40%.

What stands out is that the number of user attempts to connect to the internet remained high, even increasing by 4.8%. This means that people continued trying to use the internet as usual. Still, the network was unable to respond to those requests, indicating the onset of a sudden collapse in the network’s capacity to handle demand.

The network reached its first trough at 6:15 p.m., when data volumes dropped sharply by 78% and the rate of successful browsing requests fell by 52%. Etisalat’s customers could barely access digital services at all, and the network was in a state of near-total paralysis.

The severe outage persisted throughout the evening with no apparent signs of improvement. By 9:00 p.m., data volumes were still down by 75%, and the number of browsing attempts had fallen by 50% compared to normal levels.

Conditions deteriorated further at 1:45 a.m., when the network showed a 77% drop in total data traffic and a 60% decline in browsing requests. These figures confirmed that the network had not regained its stability, and Etisalat’s customers continued to face major difficulties accessing the internet, with no significant recovery in sight.

By the morning of Tuesday, 8 July, there were still no signs of a full-scale recovery. At 6:00 a.m., the data showed that internet traffic was at its lowest point yet, with data transmission down by 81%, web browsing by 82%, and the number of user requests down by 62%. These levels were among the worst recorded during the entire crisis, showing that most users were effectively unable to access the internet.

Even by 11:15 a.m., more than 17 hours after the fire began, the network remained in a fragile state, with data volumes still reduced by 43% and browsing requests down by 21%. For this reason, Cloudflare continued to classify the situation as an “active outage,” indicating that the connection remained highly unstable.

This timeline of indicators shows that Etisalat Misr’s network suffered a sharp and sudden collapse that lasted for many hours, with only slow and insufficient partial recovery attempts. The disruption was not caused by temporary slowdowns or brief congestion, but by a structural failure at key interconnection points within the network, which resulted in a loss of connectivity to online services.

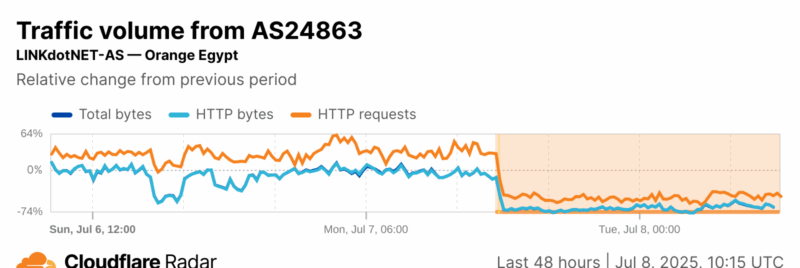

Orange Network

Data from Cloudflare shows that the Orange network was operating normally and stably through Monday afternoon. During this time, internet usage appeared typical in terms of both the volume of data consumed by users and the number of attempts to access websites or applications.

At 4:00 p.m. Cairo time, the number of browsing attempts rose by 32% compared to the usual baseline, indicating a period of notable user activity. However, there was no significant increase in the actual volume of data transmitted, which remained within normal limits. This suggests that users were connected to the internet without experiencing any issues or slowdowns at that point.

At 5:30 p.m., coinciding with the outbreak of the fire at the Ramses Central Exchange, Orange’s network experienced a sudden and severe collapse in performance. The volume of data flowing through the network dropped by 69%, with web browsing traffic declining by roughly the same percentage. Additionally, the number of user attempts to access websites fell by 42%. This sharp and abrupt drop indicates that internet service on Orange’s network began to fail almost immediately, leaving users facing serious difficulties accessing online services from that moment onward.

The situation deteriorated further after the initial collapse. By 9:00 p.m.—approximately three and a half hours into the outage—data volumes had dropped by 70%. At the same time, user browsing attempts fell by 51%, demonstrating that internet access on Orange’s network was nearly paralyzed.

The disruption persisted throughout the night and into the morning of Tuesday, 8 July. By midnight, there was little improvement, with data transmission still down by 62% and browsing requests reduced by 52% compared to normal levels.

Even by 9:00 a.m., roughly 15 hours after the outage began, no meaningful recovery had been recorded: data volumes remained between 56% and 61% below normal, while browsing requests were still reduced by 36–54%. These figures indicate that many users were still unable to access the internet even after several hours had passed.

As with Vodafone and Etisalat, this data illustrates the dependence of Orange’s network on the damaged infrastructure housed at the Ramses Central Exchange, exposing the fragility of Egypt’s internet infrastructure—particularly in the absence of effective technical solutions or redundancies to bypass major failures.

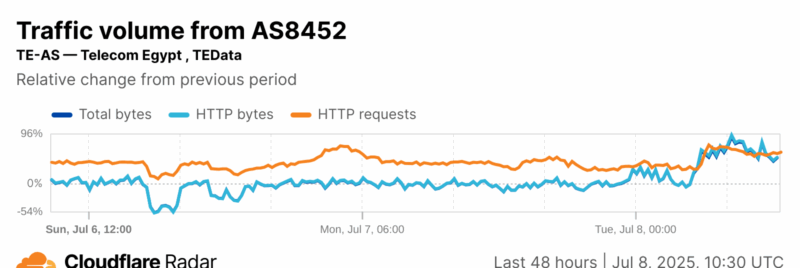

WE Network

Data from Cloudflare indicates that the WE network had been experiencing fluctuations even a full day before the fire. On the afternoon of Sunday, 6 July, the volume of data transmitted across the network decreased by approximately 54% compared to the reference period, before indicators began to recover gradually on Monday morning. This suggests that the network was already unstable before the incident.

By 5:30 p.m. on Monday, the time the fire broke out, early signs of disruption were already apparent on the WE network. Data transmission declined by 3.5%, while web browsing traffic fell by 3.1%. In contrast, the number of user requests remained high, increasing by 41%. This disparity suggests that users were attempting to access websites and services but were not receiving adequate responses from the network.

At 6:15 p.m., more pronounced signs of performance degradation emerged, as data transmission dropped by 13%, yet browsing requests stayed elevated at 35% above normal levels. This indicates that users continued to attempt to access the internet, but the service had become slower or less stable than usual.

By 9:00 p.m., the network began to show relative signs of improvement. Data transmission increased by 7.6%, web traffic rose by 8.2%, and browsing requests climbed to 49% above normal, suggesting that some parts of the network were gradually recovering, possibly due to technical interventions or rerouting of traffic through alternative paths.

At midnight on Tuesday, 8 July, the pace of recovery accelerated, with data volumes increasing by 22%, while requests remained elevated at 37% above normal levels. This development shows that the network was approaching normal operating conditions.

Starting at 6:15 a.m., the network experienced a significant surge in performance: data transmission increased by 93%, web browsing traffic by 96%, and browsing requests by 69%. This indicates that the network had not only returned to normal but had surpassed its typical levels, likely due to pent-up user activity after the period of disruption.

By 8:15 a.m., performance indicators continued to record high levels, with data volumes up 81%, web traffic up 83%, and browsing requests up 68%. By 10:15 a.m., the network was operating stably, indicating that the service had nearly returned to normal.

These findings show that WE’s network did not experience a complete outage like those seen on the Vodafone, Etisalat, and Orange networks, which were heavily affected by the fire. However, WE did suffer notable partial disruptions, particularly during the second half of Monday.
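The contrast between the four operators can be summarized by taking, for each one, the deepest drop in data volume among the figures quoted in the sections above. The sketch below uses only those quoted percentages, from the 5:30 p.m. onset onward; the data structure and function name are illustrative, and the series are sparse samples from the text rather than the full Cloudflare Radar time series.

```python
# Percentage change in data volume versus normal, as quoted in the text.
# WE's drop on Sunday predates the fire and is deliberately left out;
# Orange's 9:00 a.m. range (56% to 61% below normal) is omitted because
# it is not a single value.
reported = {
    "Vodafone": [("Mon 17:30", -58), ("Mon 21:00", -66),
                 ("Tue 00:00", -71), ("Tue 04:15", -53)],
    "Etisalat": [("Mon 17:30", -41), ("Mon 18:15", -78), ("Mon 21:00", -75),
                 ("Tue 01:45", -77), ("Tue 06:00", -81), ("Tue 11:15", -43)],
    "Orange": [("Mon 17:30", -69), ("Mon 21:00", -70), ("Tue 00:00", -62)],
    "WE": [("Mon 17:30", -3.5), ("Mon 18:15", -13), ("Mon 21:00", 7.6),
           ("Tue 00:00", 22), ("Tue 06:15", 93), ("Tue 08:15", 81)],
}


def deepest_drop(series):
    """Return the (timestamp, change) pair with the lowest change."""
    return min(series, key=lambda point: point[1])


troughs = {name: deepest_drop(series) for name, series in reported.items()}
for name, (when, change) in troughs.items():
    print(f"{name}: {change}% at {when}")
# Vodafone: -71% at Tue 00:00
# Etisalat: -81% at Tue 06:00
# Orange: -70% at Mon 21:00
# WE: -13% at Mon 18:15
```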

What the Ramses Central Fire Revealed

The data presented in this paper demonstrate how the fire that broke out at the Ramses Central Exchange on July 7, 2025, led to a widespread internet outage in Egypt. Although the incident was localized geographically, its impact extended to all major service providers, causing partial or complete paralysis of internet traffic across numerous governorates for many hours. Indicators revealed that networks such as Vodafone, Etisalat, Orange, and WE were—albeit to varying degrees—dependent on the damaged infrastructure housed in the exchange, leaving them unable to contain the crisis effectively.

This incident underscores the urgent need for a comprehensive review of Egypt’s digital communications infrastructure. It highlights the importance of reducing reliance on single points of concentration, enhancing network resilience through distributed infrastructure and the activation of alternative routes, and establishing emergency response plans supported by robust technical and logistical resources.

The sudden outage exposed the vulnerability of the current system, demonstrating how a single localized incident can disrupt essential services that affect the lives of individuals and institutions—at a time when digital communications have become a cornerstone of both the economy and society. Unless serious investments are made to strengthen the resilience of the infrastructure, the risk of future disruptions will remain high, whether caused by natural disasters, technical failures, or even cyberattacks.